My head is full of questions today. On the one hand, I need to get some front-end evaluation data on young people and mobile gaming together, in just a month, so I’m composing an online survey about that.

On the other hand it is the deadline for Bodiam Castle to submit bespoke questions for the National Trust’s visitor survey, so I need to get my head around what questions to try and persuade them to add. It can’t be everything that I’ll eventually ask on site, because the National Trust visitor survey is already pretty long. The most obvious one is did the visitor actually do (what I’m currently calling) “the thing” (because I don’t yet know what they’ve decided to call it)?

With my third hand (if only) I need to crack on with composing the interview questions for my planned research into the relationships between tech companies and heritage organisations…

But I’m going to leave that and Bodiam to one side for a moment and concentrate on the other survey. I need to ask about the target audience’s social media use, but before I do that, I ought to review what we already know. And I know very little. I hear from the papers that Facebook use is on the decline among young people because all us oldies are spoiling their fun. To which I want to say “It was always meant to be for us oldies anyhow, to keep in touch with our University friends as we got older and drifted apart. Your place, my young chums, was meant to be MySpace, but like a teenager’s bedroom you let it get messier and messier before you moved out.”

But actually my 12 year old is counting down the days to her birthday when she’ll be able to comply with Facebook’s terms and conditions and open an account (which all her friends with more relaxed parents have apparently already done). So it seems there’s life in the old network yet. My first port of call, of course, was to ask her what “the young people” were using nowadays, but she didn’t say anything that was new to me. And actually she’s a bit younger than my target market, so I had better turn to some published data.

The Pew Research Center tells me that 90% of all internet users aged 18-29 (which is pretty close to my target market) in the US (which is not) use social media. They also report the proportions of the 18-29 age band using particular social platforms. In 2013 they asked 267 internet users in that age band about what they used:

84% used Facebook

31% used Twitter

37% Instagram

27% Pinterest, and

15% LinkedIn.

I think it’s interesting that there’s such a steep difference between Facebook and the also-rans. The curve leaves very little room for other networks like Foursquare.

Meanwhile the Oxford Internet Surveys show us that use of social media is beginning to plateau at around 61% of internet users generally. They also show us that social network use declines with the age of the respondent, with 94% of 14-17 year olds using networks, dropping to the mid-80s (the graph isn’t that clear) for 18-24 year olds.

The full report of their 2013 survey concentrates on defining five internet “cultures” among users.

Although they overlap in some respects these cultures define distinctive patterns. While these cultural patterns are not a simple surrogate for the demographic and social characteristics of individuals, they are socially distributed in ways that are far from random. Younger people and students are more likely to be e-mersives, but unlike the digital native thesis, for example, we find most young students falling into other cultural categories.

The group of young people that I’m interested in here falls especially into two of those cultures: the e-mersive and the cyber-savvy, both of which might be worth looking at in more detail later. What I can see now, though, is that these two groups are the most likely to post original creative work online (rather than simply re-post what others have created). Interestingly, between the 2011 and 2013 surveys, the proportion of users putting creative stuff online has dipped a little, except for photographs. I guess that may be the Instagram effect. In fact the top five social network activities recorded in the survey are updating status; posting pictures; checking/changing privacy settings; clicking through to other websites; and leaving comments on someone else’s content.

It’s an interesting report, but nothing novel comes out of it about young people’s use of the social networks. That should be reassuring I suppose, but it doesn’t particularly inform our front-end evaluation for a mobile game based around the Southampton Plot. So we’re going to have to ask young people themselves.

How do we ask, first of all, what sort of games they are playing? There are too many to list, so I’m toying with a “dummy” question that simply gets respondents into the mood, by asking about a relatively random selection of games, but trying to include sandbox games like Minecraft, story games like Skyrim, MMORPGs like World of Warcraft, social games like Just Dance, etc. (And throwing in I Love Bees as a wild card, just to see if anybody bites at the alternate reality game that seems to be closest to our very loose vision for the Southampton Plot.) But the real meat is a free-text question that simply asks what is their favourite game that they’ve been playing recently.

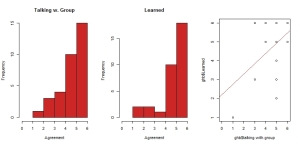

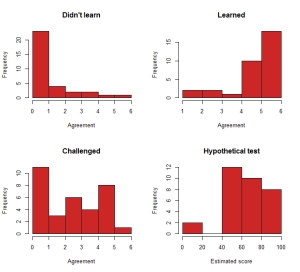

My next thought has a bit more “science” behind it. Inspired by the simple typology put together by Nicole Lazzaro, I’ve taken seventeen statements that the players she researched used to illustrate the four types of fun she describes, and asked respondents to indicate how much they agree with them. My plan is to use some clever maths to identify what sort of mix of fun our potential gamers might enjoy.
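The “clever maths” isn’t settled yet, but one simple possibility is to average the agreement scores for the statements belonging to each type of fun. The sketch below is only an illustration under assumptions that are not decided in the survey itself: a 1 to 5 agreement scale, placeholder statement IDs (`s1` to `s17`), a made-up allocation of those statements to the four types, and the type labels as they are usually given for Lazzaro’s typology.

```python
from statistics import mean

# ASSUMPTION: placeholder statement IDs and a hypothetical allocation of the
# seventeen statements to the four types of fun. The real allocation would
# come from the statements actually used in the survey.
FUN_TYPES = {
    "hard fun": ["s1", "s2", "s3", "s4"],
    "easy fun": ["s5", "s6", "s7", "s8"],
    "serious fun": ["s9", "s10", "s11", "s12"],
    "people fun": ["s13", "s14", "s15", "s16", "s17"],
}


def fun_profile(responses):
    """Mean agreement (assumed 1-5 scale) per fun type across all respondents.

    `responses` is a list of dicts mapping statement ID -> agreement score.
    Unanswered statements are skipped; a type with no answers gets None.
    """
    profile = {}
    for fun_type, statements in FUN_TYPES.items():
        scores = [r[s] for r in responses for s in statements if s in r]
        profile[fun_type] = round(mean(scores), 2) if scores else None
    return profile


def fun_mix(profile):
    """Each fun type's share of the total of the mean scores."""
    answered = {k: v for k, v in profile.items() if v is not None}
    total = sum(answered.values())
    return {k: round(v / total, 3) for k, v in answered.items()}


if __name__ == "__main__":
    # Two invented respondents, purely to show the shape of the data.
    all_ids = [s for ids in FUN_TYPES.values() for s in ids]
    keen_on_hard = {s: (5 if s in FUN_TYPES["hard fun"] else 2) for s in all_ids}
    neutral = {s: 3 for s in all_ids}
    profile = fun_profile([keen_on_hard, neutral])
    print(profile)
    print(fun_mix(profile))
```

A plain average like this treats every statement as equally important, which may well be too crude; it is just the simplest starting point before trying anything fancier.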

Then I plan to ask them about the social networks they use, including the top three from the OIS data (Facebook, Instagram and Twitter) but also throwing in Pinterest (which the US data also highlighted) and Foursquare (which I wanted to include because it is inherently locative, though Facebook and Instagram are too, slightly more subtly). We’ll see how much our sample matches the published data in terms of users. I’ve also asked them to name another network if they are using one that’s not on my list. Just in case MySpace is making its comeback at last 🙂 or G+ is finally getting traction.
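Checking the sample against the published data could be as simple as lining up percentages. Here is a rough sketch, assuming each respondent’s answer arrives as a set of network names (that format, and the function name, are my own invention). The benchmark figures are the 2013 Pew numbers for US 18-29 year olds quoted above, so they are only a loose yardstick, and they don’t cover Foursquare at all.

```python
# Pew Research Center, 2013, US internet users aged 18-29 (figures quoted above).
PUBLISHED_PCT = {
    "Facebook": 84,
    "Twitter": 31,
    "Instagram": 37,
    "Pinterest": 27,
    "LinkedIn": 15,
}


def compare_to_published(answers, published=PUBLISHED_PCT):
    """Compare the share of respondents using each network with a benchmark.

    `answers` is a list with one set of network names per respondent
    (ASSUMED format). Returns {network: (sample %, published %, difference)}.
    """
    n = len(answers)
    if n == 0:
        raise ValueError("no respondents to compare")
    comparison = {}
    for network, published_pct in published.items():
        users = sum(network in chosen for chosen in answers)
        sample_pct = round(100 * users / n, 1)
        comparison[network] = (
            sample_pct,
            published_pct,
            round(sample_pct - published_pct, 1),
        )
    return comparison


if __name__ == "__main__":
    # Four invented respondents, purely for illustration.
    invented = [
        {"Facebook", "Instagram"},
        {"Facebook"},
        {"Twitter"},
        {"Facebook", "Pinterest"},
    ]
    for network, row in compare_to_published(invented).items():
        print(network, row)
```

With a small sample, differences of a few percentage points either way won’t mean much, so this is more of a sanity check than a test.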

Then I’ve suggested a similar question about messaging networks, like WhatsApp and Snapchat.

I have also included a question about smartphones: whether they have one, and what sort (iOS, Android etc.) it is. And I’ve tried to create a question about how much of their social networking is mobile vs desk (or laptop) based, but it’s the one I’m least happy about.

Finally, as we’re trying to use this game to get people to places, I’ve asked about transport: walking; cycling; public transport; catching lifts; and being able to drive themselves. We’ll see how mobile they turn out to be.